Overview

In this lab, you will use Azure Machine Learning to train, evaluate, and publish a machine learning model. The lab is intended as a gentle, guided introduction to core ML concepts. After completing it, you will be familiar enough with the platform and basic ML concepts to continue exploring on your own.

I plan to upload videos of these demos to the playfullySerious YouTube channel. If you are interested, subscribe to get notified when they are up; they can help you practice later on.

Prerequisites

To complete this lab, you will need the following:

- A Microsoft account

- If you don't have one, go to login.live.com and set one up using any personal email address. I use my Gmail address for this.

- A web browser and Internet connection

- The data files used in this lab, available at the Google Drive link. Download them.

- Visit login.azureml.net or studio.azureml.net to get started.

- Once logged in, click Datasets -> + New -> Upload, and upload the datasets you downloaded.

Experiment 1: Simple Linear Regression

Click on Experiments, then click +NEW, then select Blank Experiment to create one. It will have a name like “Experiment Created on…” at the top. Click on that and replace it with a name like my_regression_experiment.

Expand "Saved Datasets", then from the My Datasets menu, drag the dataset simple linear regression.csv onto the top of the canvas.

Add modules from the available set to create the following experiment flow.

Save and Run the experiment. Click on the output port of Evaluate Model and observe the following results:

The experiment workflow is shown in the picture above. The fitted linear regression model is -1.1 + 2.3X, and the Scored Labels (predicted values) are computed from it. For example, for row 1, where X = 1, the Scored Label is -1.1 + 2.3 * 1 = 1.2.
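To make the scoring step concrete, here is a minimal Python sketch. It scores inputs with the model reported above (-1.1 + 2.3X) and also shows the closed-form least-squares fit for a single feature. The sample x/y values are hypothetical, chosen to lie exactly on that line; they are not the contents of simple linear regression.csv.

```python
def score(x, intercept=-1.1, slope=2.3):
    """Scored Label for one input using the fitted line from Experiment 1."""
    return intercept + slope * x


def fit_simple_linear_regression(xs, ys):
    """Closed-form least squares for one feature: slope = cov(x, y) / var(x)."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical points lying exactly on y = -1.1 + 2.3x
xs = [1, 2, 3, 4]
ys = [1.2, 3.5, 5.8, 8.1]
intercept, slope = fit_simple_linear_regression(xs, ys)
print(round(intercept, 6), round(slope, 6))
print(round(score(1), 6))
```

Because the points are noise-free, the fit recovers the same intercept and slope; on real data the line is only the best fit in the least-squares sense.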

The evaluation metrics show an R Square, or coefficient of determination, of 0.96. The same values can be seen from the regression line (trendline) added to an Excel scatter plot of the data.
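For reference, the coefficient of determination is conventionally defined as follows, where $y_i$ are the actual labels, $\hat{y}_i$ the Scored Labels, and $\bar{y}$ the mean label:

```latex
R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}
```

Read this way, an $R^2$ of 0.96 means the fitted line leaves about 4% of the label's variation around its mean unexplained.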

Recap of key takeaways from Experiment 1:

- How to add your own dataset to the Azure ML repository

- How to create a new experiment and observe the model performance metrics

- Basic terminology associated with ML: Features, Label, Regression, Linear Regression, Regression Model evaluation metrics

Experiment 2: Decision Tree Regression

This is a toy experiment to demonstrate how a decision tree algorithm works by partitioning the dataset. The real_estate dataset was synthetically prepared to help build an intuitive understanding of this popular ML algorithm.

Create a new experiment, using the steps learned in the previous experiment, to build this workflow:

The fitted decision tree model

The figure above has three parts. The first shows part of the full experiment: we use the Decision Forest Regression module, configured to create a single decision tree. The module's settings are also shown, and to their right is the fitted decision tree model. As expected, the tree partitions the dataset first on city tier and then on number of rooms. The lowest nodes in the tree (called leaf nodes) hold the predictions. So for a tier 1 city and 2 rooms, this tree predicts 11.61. Similarly, a property in a tier 2 city with 3 rooms gets a prediction of 6.843.
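The partitioning idea can be sketched in plain Python. This is a simplified, hypothetical regression tree learner, not Azure ML's Decision Forest implementation: at each node it tries every feature and threshold, keeps the split that most reduces the total sum of squared errors of the two children, and stores the mean target in each leaf. The feature names city_tier and rooms and the prices are made-up stand-ins for the real_estate dataset.

```python
from statistics import mean


def sse(values):
    """Sum of squared errors around the mean (0 for an empty list)."""
    if not values:
        return 0.0
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def best_split(rows, targets, features):
    """Return the (feature, threshold) that most reduces SSE, or None if no split helps."""
    best, best_score = None, sse(targets)
    for f in features:
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, targets) if r[f] <= t]
            right = [y for r, y in zip(rows, targets) if r[f] > t]
            if not left or not right:
                continue
            score = sse(left) + sse(right)
            if score < best_score:
                best_score, best = score, (f, t)
    return best


def build_tree(rows, targets, features, depth):
    """Grow the tree recursively; a leaf predicts the mean target of its rows."""
    split = best_split(rows, targets, features) if depth > 0 else None
    if split is None:
        return {"leaf": mean(targets)}
    f, t = split
    left = [i for i, r in enumerate(rows) if r[f] <= t]
    right = [i for i, r in enumerate(rows) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([rows[i] for i in left], [targets[i] for i in left], features, depth - 1),
        "right": build_tree([rows[i] for i in right], [targets[i] for i in right], features, depth - 1),
    }


def predict(tree, row):
    """Walk from the root to a leaf and return the leaf's prediction."""
    while "leaf" not in tree:
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree["leaf"]


# Hypothetical data: two rows for each (city_tier, rooms) combination
rows = [
    {"city_tier": 1, "rooms": 2}, {"city_tier": 1, "rooms": 2},
    {"city_tier": 1, "rooms": 3}, {"city_tier": 1, "rooms": 3},
    {"city_tier": 2, "rooms": 2}, {"city_tier": 2, "rooms": 2},
    {"city_tier": 2, "rooms": 3}, {"city_tier": 2, "rooms": 3},
]
prices = [11.0, 12.0, 15.0, 16.0, 5.0, 6.0, 7.0, 8.0]
tree = build_tree(rows, prices, ["city_tier", "rooms"], depth=2)
print(predict(tree, {"city_tier": 1, "rooms": 2}))
```

On this toy data the root split is on city_tier and the second level splits on rooms, mirroring the shape of the fitted tree described above; the leaf values differ because the data is invented.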

Key takeaways from Experiment 2:

- Practice in creating an experiment and adding basic modules, such as an algorithm module and the Train Model module

- Intuition for how decision tree algorithms partition datasets and make predictions

- That for the same regression problem, we can try linear regression, decision forest regression, or other available modules

These experiments prepare the ground for a more complex exercise in Experiment 3, which takes us much closer to a real-life scenario. California, here we come!

Experiment 3: California House Price Prediction

This experiment builds on the basics of creating Azure ML experiments and focuses on several new ML concepts.

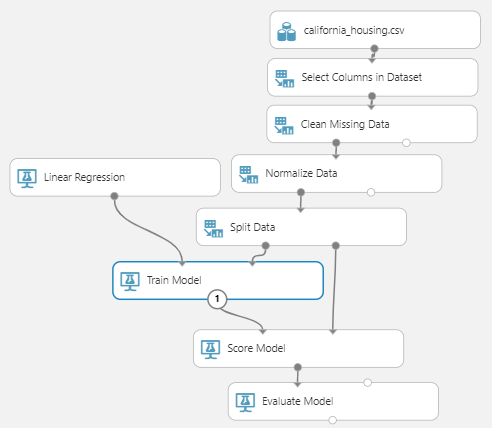

Create the following new experiment workflow:

Create a new experiment and name it CaliforniaHousingLinearRegression. Drag the dataset california_housing.csv onto the canvas.

The Select Columns module lets you include or exclude columns. In this case, select all columns except latitude and longitude.

Cleaning missing data is a core step in ML, and the Clean Missing Data module offers various options. Leave the other values at their defaults and just select the Remove entire row option.
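Outside Azure ML, the Select Columns and Clean Missing Data (Remove entire row) steps amount to roughly the following sketch, using only the Python standard library. Apart from latitude and longitude, the column names, including the Price label, are hypothetical and may not match california_housing.csv.

```python
import csv
import io


def select_and_clean(csv_text, exclude=("latitude", "longitude")):
    """Drop the excluded columns, then drop any row that has a missing value."""
    cleaned = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Select Columns: keep everything except the excluded columns
        row = {k: v for k, v in row.items() if k not in exclude}
        # Clean Missing Data, "Remove entire row": skip rows with an empty or absent value
        if any(v is None or v.strip() == "" for v in row.values()):
            continue
        cleaned.append({k: float(v) for k, v in row.items()})
    return cleaned


# Hypothetical sample; the second data row is missing AveRooms
sample = """MedInc,AveRooms,latitude,longitude,Price
8.3,6.9,37.88,-122.23,4.5
7.2,,37.86,-122.22,3.6
5.6,5.8,37.85,-122.24,3.5
"""
rows = select_and_clean(sample)
print(len(rows), sorted(rows[0]))
```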

Normalize Data gives all the features comparable magnitudes, so that differences in their scales of measurement do not distort the model. Select the MinMax method and select all feature columns; exclude the label (target) column.
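MinMax normalization rescales each feature to the range [0, 1] using (x - min) / (max - min). A minimal sketch, assuming rows are dictionaries of numbers and the label column (here the hypothetical Price) is left untouched:

```python
def min_max_normalize(rows, label):
    """Rescale every non-label column to [0, 1]; the label column is copied unchanged."""
    features = [k for k in rows[0] if k != label]
    lows = {f: min(r[f] for r in rows) for f in features}
    highs = {f: max(r[f] for r in rows) for f in features}
    normalized = []
    for r in rows:
        new_row = dict(r)  # copy so the input rows stay untouched
        for f in features:
            span = highs[f] - lows[f]
            # Assumption: a constant column maps to 0.0 to avoid dividing by zero
            new_row[f] = (r[f] - lows[f]) / span if span else 0.0
        normalized.append(new_row)
    return normalized


rows = [
    {"MedInc": 2.0, "AveRooms": 4.0, "Price": 1.0},
    {"MedInc": 6.0, "AveRooms": 8.0, "Price": 3.0},
    {"MedInc": 4.0, "AveRooms": 5.0, "Price": 2.0},
]
print(min_max_normalize(rows, label="Price"))
```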

Split the data 80:20. The 80% portion, called the training set, is used to learn the model. The 20% portion, called the test set, is used to evaluate it: it stands in for future data the model has not seen yet, except that we know the correct target values for these rows.
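A randomized 80:20 split can be sketched like this. Fixing the random seed makes the split reproducible, which is roughly what the random seed setting on the Split Data module is for; the function and its defaults are illustrative, not Azure ML's implementation.

```python
import random


def split_data(rows, train_fraction=0.8, seed=42):
    """Shuffle a copy of the rows, then cut it into train and test portions."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded, so the same split every run
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


train, test = split_data(range(100))
print(len(train), len(test))
```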

In the Linear Regression module, keep Ordinary Least Squares as the solution method and set the L2 regularization weight to 0. Ordinary least squares uses the sum of squared errors as its cost function and finds the coefficients that minimize it; this cost function is common for regression algorithms.

In the Train Model module, select the target column, that is, the label column we want to predict.
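Ordinary least squares has a closed-form solution via the normal equations, (XᵀX + λI)w = Xᵀy, where λ plays the role of an L2 regularization weight; with λ = 0 it reduces to plain OLS, the setting used here. This pure-Python sketch uses hypothetical helper names and is not Azure ML's code. It leaves the intercept unpenalized, a common convention that may differ from the module's exact behavior, and solves the system with Gaussian elimination.

```python
def solve(a, b):
    """Solve the square system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def fit_linear_regression(x_rows, y, l2_weight=0.0):
    """Minimize SSE + l2_weight * ||w||^2 (intercept not penalized); returns [b0, b1, ...]."""
    X = [[1.0] + list(row) for row in x_rows]  # leading 1 for the intercept term
    p = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * t for r, t in zip(X, y)) for i in range(p)]
    for i in range(1, p):  # skip index 0, the intercept
        xtx[i][i] += l2_weight
    return solve(xtx, xty)


def sse(w, x_rows, y):
    """Sum of squared errors: the cost that OLS minimizes."""
    return sum((t - (w[0] + sum(wi * xi for wi, xi in zip(w[1:], row)))) ** 2
               for row, t in zip(x_rows, y))


x_rows = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1)]
y = [1, 3, 4, 6, 8]  # hypothetical: exactly 1 + 2*x1 + 3*x2
w = fit_linear_regression(x_rows, y, l2_weight=0.0)
print([round(v, 6) for v in w], sse(w, x_rows, y))
```

Raising l2_weight above 0 shrinks the coefficients toward zero, so the training SSE can only stay the same or grow; that trade-off is what the L2 setting controls.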

Add the Score Model and Evaluate Model modules as before. Note how the outputs of Split Data are connected: the 80% training set feeds Train Model, and the 20% test set feeds Score Model.

Save and Run this experiment. Then click the output port of the Train Model module and select Visualize. The linear regression model is:

Predicted Price = 0.058 + 34.5129 * AveBedrms - 32.5352 * AveRooms + 7.899 * MedInc … + 0.53167 * Population

The model performance metrics are:

These show an R Square, or coefficient of determination, of 0.516, a root mean squared error (RMSE) of 0.8, and so on. This gives us a baseline model that we can try to improve on with other algorithms.
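Evaluate Model computes these metrics by comparing the Scored Labels with the actual labels of the 20% test rows. A minimal sketch of three of them, using their standard definitions (the module's other metrics are not reproduced here); the values in the usage line are hypothetical:

```python
import math


def regression_metrics(actual, predicted):
    """Mean absolute error, root mean squared error, and coefficient of determination."""
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]
    mean_actual = sum(actual) / n
    ss_res = sum(e * e for e in errors)                      # residual sum of squares
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)     # total sum of squares
    return {
        "mae": sum(abs(e) for e in errors) / n,
        "rmse": math.sqrt(ss_res / n),
        "r2": 1 - ss_res / ss_tot,
    }


print(regression_metrics([3.0, 5.0, 7.0], [2.5, 5.0, 7.5]))
```

RMSE is in the same units as the label, while R² is unitless, which is why comparing models on R² is convenient across datasets.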

It is common in ML to try a set of algorithms and then also tune each of the models obtained from each algorithm. Let us explore Decision Forest Regression, this time with more than one tree.

Use "Save As" to make a copy of this experiment, and name the new experiment CaliforniaHousingDecisionForestRegression. Replace the Linear Regression module with the Decision Forest Regression module and use the following settings:

Resampling method: Bagging; Create trainer mode: Single Parameter; Number of decision trees: 5; Max depth: 5. Keep the other parameters at their defaults. Now Save and Run the experiment.

Click the output port of the Train Model module and select Visualize to see the 5 different trees. The algorithm builds 5 trees and outputs the average of their predictions.
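Bagging trains each tree on a bootstrap sample (rows drawn with replacement) and averages the trees' predictions. This sketch illustrates that idea with single-split trees on one feature instead of depth-5 trees over many features, so it is a simplified stand-in for the Decision Forest Regression module rather than a reproduction of it; the data is hypothetical.

```python
import random
from statistics import mean


def fit_stump(xs, ys):
    """Fit a one-split regression tree on one feature (the threshold with the lowest SSE)."""
    best = None
    for t in sorted(set(xs))[:-1]:  # exclude the max so the right side is never empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        lm, rm = mean(left), mean(right)
        err = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    if best is None:  # every sampled x is identical: predict the mean
        m = mean(ys)
        return lambda x: m
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def bagged_forest(xs, ys, n_trees=5, seed=0):
    """Train n_trees stumps on bootstrap samples; predict by averaging them."""
    rng = random.Random(seed)
    n = len(xs)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        trees.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(tree(x) for tree in trees)


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
forest = bagged_forest(xs, ys, n_trees=5, seed=0)
print(forest(1), forest(6))
```

Each tree sees a slightly different sample, so the trees disagree a little; averaging them smooths out those differences, which is one reason a forest often scores better than a single tree.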

Observe that the coefficient of determination in the Evaluate Model module is better than the one from the linear regression experiment. And we have barely started tuning this very powerful algorithm!

Next, try other algorithms such as Boosted Decision Tree Regression or Neural Network Regression. Use their default settings and observe the results.

Key takeaways from Experiment 3:

- The experiment used a more realistic workflow, including handling missing data, transforming (normalizing) data, and splitting the dataset

- Introduced the practice of fitting models with different algorithms for the same problem and comparing the results

- The opportunity to tune each model through algorithm-specific settings, known as hyperparameters

I hope you enjoyed this session. Keep practicing with more datasets: there are several in the Azure ML repository itself and many more on Kaggle.

Datasets used in this demo

- Download from the Google Drive link:
